15+ Best Google Search Scrapers and APIs [2025 Edition]


Wanna extract all search results from Google but don’t know how to do it? Doing it manually is time-consuming and inefficient. But there are ready-made scrapers and APIs to do that.

In this article, we'll compare the best Google search scrapers and APIs. Before that, let’s define a Google SERP scraper and its use cases for those who don’t know.

What is a Google Search Results Scraper?

A Google Search Results Scraper or SERP scraper is a tool that automatically extracts data (URLs, titles, snippets, etc.) from search engine result pages.

Why Scrape Google Search Results?

For example, an investigative journalist wants to do a story on environmental damage. Lithium mining can be a good start.

We can start our research by entering the “lithium mining environmental damages” keyword in our search engine.

A Google SERP scraper can make it easy and way faster to collect all the articles from various regions. This can save our Journo a lot of time.

Other use cases of Google Search Scraper include:

- Market Research

- SEO (Search Engine Optimization)

- Content Marketing

- Lead Generation

- Price Monitoring

- Academic Research

- Journalism

But is scraping Google SERP data legal?

Is it legal to scrape Google search results?

Now let’s explore the best SERP scrapers and compare them. But how did I shortlist these tools?

How to choose the best Google search scraper?

To find the best SERP scraping solution for you, I started my quest with a simple Google search. Yeah, no magic, man, no enchantments. All it takes is a Google search.

But there are too many of them. How to find the one that actually works? It was a little hard to find working no-code scrapers.

So after a few hours of searching, I compiled a list of 12 no-code scrapers to test. I compared them based on:

- What data attributes do they extract?

- How many results do they extract per minute?

- How easy are they to use?

- What cool features do they offer?

- How much do they cost?

For a better comparison, I also tested a few SERP APIs, and we’ll explore them as well. But what’s the difference between SERP APIs and no-code SERP scrapers?

No-code SERP Scrapers vs SERP APIs

With no-code scrapers, you don’t need any coding skills. They offer a user-friendly interface with different options. Just a few clicks to configure your scraper, and voila! You’re ready to go.

SERP APIs are for nerds. They offer a more programmatic approach. You access data through code calls which obviously requires a solid understanding of programming.

Which one’s better? Let’s create a comparison table:

| Feature | No-Code Scrapers | SERP APIs |

|---|---|---|

| Ease of Use | Very easy, visual interface | Moderate (requires some technical understanding) |

| Coding Required | No | Yes (basic coding knowledge needed to integrate) |

| Scalability | Limited (often have fixed data plans or usage caps) | High (can handle large data volumes with flexible plans) |

| Price Competitiveness | Usually cheaper (often subscription-based) | Can vary (pay-per-request or tiered pricing models) |

| Customization | Limited (pre-built, less control over data format) | High (full control over data extraction and format) |

| Maintenance & Support | Limited (rely on vendor for updates and troubleshooting) | More independent (can manage integration and updates yourself) |

| Suitable for: | Users with no coding experience, quick data extraction needs | Developers, building apps with SERP data integration |

But in this article, I’ve covered both. I’m going to compare all no-code tools I found, and some of the best SERP APIs in my experience.

Let’s start with the best no-code scrapers.

Best no-code Google search scrapers

For this article, I’ve shortlisted 13 no-code SERP scrapers. Let’s explore their features, pricing, pros and cons.

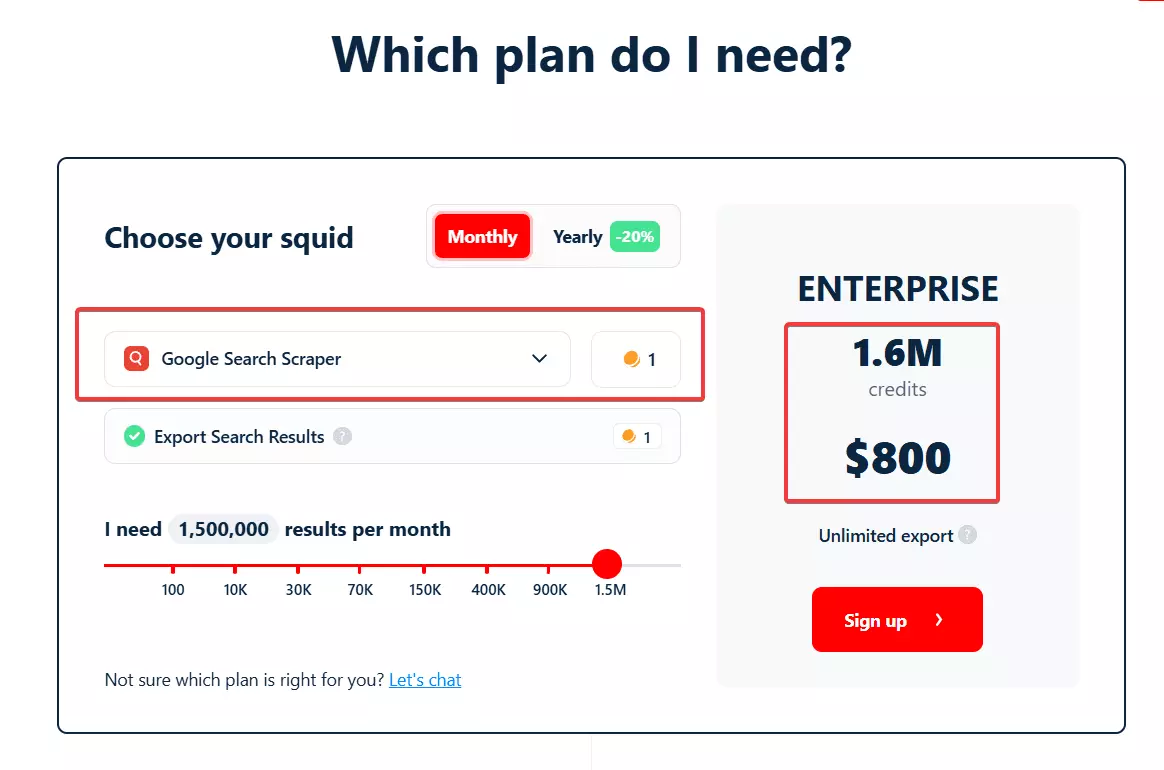

1. Lobstr.io

Ease of use

Lobstr.io is super easy to use. The user-interface is simple and clean. You can launch the scraper in less than 2 minutes.

All you have to do is – choose the scraper, add URLs, and bingo! You’re all set to extract SERP data.

You can add search URLs manually or upload them in bulk. The settings menu is pretty straightforward. You also get a live console to monitor data extraction.
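If you have a long keyword list, you can generate the search URLs with a few lines of Python instead of copying them from the browser. Here's a minimal sketch. It assumes the widely used Google URL parameters `q` (query), `hl` (interface language), and `gl` (country). The helper names and the `url` CSV header are my own placeholders, so check which column name your scraper expects before uploading.

```python
import csv
import io
from urllib.parse import urlencode


def build_search_url(query: str, language: str = "en", country: str = "us") -> str:
    """Return a Google search URL for one query.

    q = search terms, hl = interface language, gl = country code.
    """
    params = {"q": query, "hl": language, "gl": country}
    return "https://www.google.com/search?" + urlencode(params)


def urls_to_csv(queries: list[str], **kwargs) -> str:
    """Render one search URL per row as CSV text, ready to save and upload."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["url"])  # assumed header name, adjust to your tool
    for query in queries:
        writer.writerow([build_search_url(query, **kwargs)])
    return buffer.getvalue()


if __name__ == "__main__":
    keywords = ["lithium mining environmental damages", "lithium mining water usage"]
    print(urls_to_csv(keywords, language="en", country="us"))
```

Save the printed text as a `.csv` file and you have a bulk input list.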

Cool features

- 13 data attributes including ads, PAA, and related searches

- City, country, and language filtering

- 130+ results per minute speed

- Cloud-based, no installation required

- Schedule feature to automate scraping

- Developer-ready API

- Google Sheets, Amazon S3, SFTP, and Webhook integration

Pricing

- Free tier: 100 results per month

- Premium tier: $0.5 per 1000 results

| Pros | Cons |

|---|---|

| Easy to use | Only supports .csv format for downloading |

| Can extract both organic and paid results | |

| People also ask, related queries included | |

| Affordable | |

| City/State filters | |

| Scheduling | |

| API access | |

Best for

Due to affordable pricing and cool features, Lobstr.io is suitable for both businesses and individuals. You can use it for SEO and data collection at scale.

2. Apify

Ease of use

Apify’s user interface is a bit cluttered. But once you understand how every option works, it’s super easy to use. You can enter URLs or search queries or both as inputs.

Apify supports city/region filters, but they can be confusing for non-techies. You’ll have to convert the city name into a UULE parameter.

Not many people know what a UULE parameter is. Definitely not straightforward.
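For the curious, a UULE is just an encoded location name that Google accepts in the `uule` URL parameter. Google doesn't document the format officially, so treat the sketch below as the community-documented scheme, not a guaranteed one: a fixed prefix, one character that encodes the length of the name, then the name in Base64. The function name `make_uule` is my own, and the location string is assumed to be a "canonical name" from Google's geo targets list.

```python
import base64

# Lookup table that maps the length of the location name to one character.
# This comes from community write-ups, not from official Google documentation.
_UULE_KEY = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-_"


def make_uule(canonical_name: str) -> str:
    """Encode a canonical location name as a UULE string (unofficial scheme)."""
    encoded = canonical_name.encode("utf-8")
    if len(encoded) >= len(_UULE_KEY):
        # This simple sketch only handles names shorter than 64 bytes.
        raise ValueError("location name too long for this encoding")
    length_char = _UULE_KEY[len(encoded)]
    payload = base64.b64encode(encoded).decode("ascii")
    return "w+CAIQICI" + length_char + payload


if __name__ == "__main__":
    print(make_uule("New York,New York,United States"))
```

Remember to URL-encode the result before appending it to a search URL as `uule=...`, and test it against the tool you're using, since the accepted format may differ.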

Apify also provides a live monitor to check extraction results. You can also get the SERP API from Apify and integrate it into your applications.

Cool features

- 15 data attributes including ads, PAA, and related queries

- City, country, and language filtering

- 500+ results per minute speed

- Cloud-based

- Schedule feature

- Multiple download options including csv, json, xml

- Multiple 3rd party integrations

- API access

Pricing

- Free tier: $5 in platform credits per month

- Premium tier: $0.05 per 1000 results

| Pros | Cons |

|---|---|

| Affordable | No bulk upload for inputs |

| Super fast | Need to manage IPs? |

| API access | |

| Multiple data export formats and integrations | |

Best for

Apify is affordable and offers data collection at scale. With lots of integrations, it’s suitable for businesses of all sizes.

3. Oxylabs

Ease of use

While the Oxylabs scraper is a bit more involved than the other tools on this list, it gives you much more control over the output. Moreover, the large number of available sources lets you scrape all of Google’s products, including shopping, news, trends and more.

Cool features

- Up to 5,000 URLs per batch

- City, country, and language filtering

- 3000 results per minute speed

- Cloud-based

- Schedule feature

- Anti-blocking technology

- API access

Pricing

- Free tier: up to 2,000 results

- Cheapest tier: $1 per 1000 pages

| Pros | Cons |

|---|---|

| API access | Advanced features may require technical knowledge |

| Bypass geo-restrictions and anti-bot systems | |

| Pay only for successful results | |

| Custom parser: Independently write parsing instructions and parse any target, no need to maintain your own parser. | |

| Cloud based with scheduling | |

Best for:

Businesses that require high-volume Google SERP data extraction with advanced features like custom parsing and scheduling.

4. Outscraper

Based in the USA, Outscraper is a data scraping company providing plenty of no-code web scrapers and scraping APIs.

Ease of use

Outscraper only supports search queries. You can either enter them manually or upload them in bulk. The user interface is pretty straightforward, nothing much to configure.

Just like Apify, getting location specific results is not straightforward in Outscraper. You’ll need a UULE parameter. This makes it complicated for non-techie users.

You can also enrich results with other services like email and contact scraper, email verifier, and disposable mail checker.

There’s no live console or results tracking. You don’t know when the job will finish and can’t track real-time progress.

Cool features

- 6 data attributes

- 10 results per minute

- Schedule feature

- Cloud-powered

- Export data to .csv

- Offers SERP API

- Integrates with Webhooks, Zapier, and HubSpot

Pricing

- Free tier: 25 results per search

- Premium tier: $3 per 1000 pages

| Pros | Cons |

|---|---|

| Cloud based with scheduling | Only 6 data attributes |

| Extracts organic, ads, related, and PAA | Extremely slow |

| Email and contact details enrichment | Expensive |

| Easy to use | |

| SERP API | |

Best for

If you prefer extracting SERP data at super speed, Outscraper is not an ideal choice. It’s good for lead generation but truly an expensive tool.

5. Hexomatic

Ease of use

Hexomatic offers a really clean and easy to use user interface. You can add a search query, select total results and start scraping.

You can’t just enter a city to get local results. You’ll have to specify coordinates of the area or select the country instead. This makes it a little complicated.

Also, the tool can’t extract related queries and people also ask questions. But a really cool feature is – you can add AI tools like GPT and Bard to your workflow.

Cool features

- 10 data attributes, both paid and organic

- Multiple 3rd party integrations

- Export data as csv, xlsx, json

- Cloud-based and AI-powered

- Schedule option available

Pricing

- No free tier

- Premium tier: $4 per 1000 results

| Pros | Cons |

|---|---|

| Cloud-based and AI-powered | No related/PAA results |

| Connects to AI models | No free plan |

| Multiple export and integration options | Speed is uncertain |

| Offers Chrome and Firefox addons | Expensive |

Best for

Hexomatic can be used as a SEO and analysis tool due to its AI integrations. But the expensive pricing plans make it unsuitable for small businesses.

6. Botster

Ease of use

Botster is really easy to set up. All you need to do is enter a query, select total results, and pinpoint the location. Your bot is ready to roll.

You can target specific locations for local results using location coordinates. But unlike other tools, you don’t have to find them. You can pinpoint the location in the embedded map.

You can sync the scraper to Slack, and invite your team members to view and analyze data in the Botster dashboard.

Cool features

- 5 data attributes

- 20 results per minute

- Integrates with Slack, Google Drive, and other services

- Schedule feature

- Export to csv, json, xlsx

- API access

Pricing

- Free tier: 15 results per search

- Premium tier: $5 per 1000 results

| Pros | Cons |

|---|---|

| Cloud based with schedule | Slow |

| Multiple integrations | Expensive |

| Multiple export options | Only organic results |

| Map feature | |

Best for

Botster, despite being expensive, is a good SEO tool. It lets you track top results. But you can’t use it for data collection at scale.

7. Scrape-It

Ease of use

Scrape-It Cloud is one of the simplest scrapers available. You get a simple, minimalistic, clean user interface with not many options.

All you have is 3 options i.e. search queries, max results, and country. It doesn’t support language and region filters.

There’s no live console, results tracking, or scheduling. For extracting data at scale, you’ll have to use the SERP API.

Cool features

- 10 data attributes

- 350+ results per minute

- API access

- Export data to csv, json, xlsx

Pricing

- Free tier: 1000 results/API calls

- Premium tier: $0.1 per 1000 results

| Pros | Cons |

|---|---|

| Super fast | No schedule |

| Easy to use | No ads, related, and PAA |

| Affordable | No integrations |

| Multiple export options | No location and language |

Best for

As far as the no-code variant is concerned, it’s good for personal use only. You can’t use it to collect data at scale. The real deal is scrape-it’s SERP API.

8. No Data No Business

Ease of use

Though ImportFromWeb, the Google Sheets add-on from No Data No Business, offers ready-made templates, it's still not easy to use. It works as a custom formula that you add to your Google Sheet.

You can add URL, data attributes to extract, filters like language, max results. Once you’re done with parameters, the formula will load results from Google SERP.

For people also ask and related searches, you’ll have to add additional formulas. ImportFromWeb also offers templates and demos to make it easy for beginners.

Cool features

- 5 data attributes

- Direct Google Sheet import

- Supports URL and search query

- Customizable

Pricing

- Free trial: 200 results

- Premium tier: $15 per 1000 results

| Pros | Cons |

|---|---|

| Direct import to Google Sheets | No schedule |

| Supports Google suggestions | Too expensive |

| Customizable | Nerdy and difficult to use |

| | Very slow |

Best for

The only use case I could think of is price comparison and product research. The add-on is super expensive and doesn’t offer a lot of features.

9. Octoparse

Ease of use

Using the pre-built template saves you a lot of time. You can select a language, add up to 10 keywords, and start collecting data. It’s easy to set up.

Octoparse works as an automated browser for you. Once it’s launched, it’ll open a browser, tweak it according to the input, and start collecting data.

You can also customize it by using visual scraper instead of template. But it is a complex process and might give you a headache while setting pagination behavior.

There’s no country and region filter in the tool.

Cool features

- 5 data attributes

- 100 results per minute

- Schedule feature

- Cloud-based

- Customizable

- API access

- Multiple export options

Pricing

- Free tier: Limits undefined, but no IPs, cloud, and scheduling

- Premium tier: $250/mo + extra cost for proxies (limits not defined)

| Pros | Cons |

|---|---|

| Easy to use | Expensive |

| Schedule and cloud support | Only 5 data attributes |

| Visual scraping support | No ads, PAA, related searches |

| API access | 10 keywords per task |

| Fast | No country, region filter |

Best for

Octoparse is a good lead generation tool. You can’t use it for SEO due to limited filters. Since the limitations are unknown, I don’t know how it’ll perform while collecting data at scale.

10. Axiom AI

Ease of use

Axiom is not easy to use. Configuring a bot to scrape Google search results is a complete headache.

The pre-built workflows don’t work properly, so you’ll have to create a new workflow and set up everything manually.

Cool features

- 5 data attributes

- Export to csv

- 5 results per minute

- Cloud-based with Desktop app support

- Integrates with Google Sheets, Zapier, and other 3rd party solutions

- Works with ChatGPT

- Schedule option

Pricing

- Free trial: 600 results

- Premium tier: $5.5 per 1000 results

| Pros | Cons |

|---|---|

| Cloud-based | Steep learning curve |

| Schedule available | Expensive |

| AI powered | Extremely slow |

| Multiple integrations | |

Best for

Axiom is not suitable for data collection at scale. It’s good for limited data collection for analysis like top 10 results monitoring only.

11. ScrapeHero

Ease of use

ScrapeHero is really easy to use. It offers a minimalistic user interface. All you have to do is – enter a keyword, and select total results to scrape.

It doesn’t support country, region, and language filters. You get a pretty basic progress dashboard for tracking progress and viewing extracted results.

Cool features

- 10 data attributes including paid, organic, and related

- 75 results per minute

- Cloud-based

- Schedule option available

- Export data as csv, json, xlsx

- Integrates with Dropbox, Amazon S3, Google Drive

- API access

Pricing

- Free trial: 250 results

- Premium tier: $0.63 per 1000 results

| Pros | Cons |

|---|---|

| Cloud-based | No country, region, language filter |

| Schedule supported | No PAA data |

| Fast | |

| Affordable | |

| API access | |

Best for

ScrapeHero is good for extracting data at scale. It’s fast and affordable too. But it’s not suitable for SEO related use cases as it doesn’t support country or language filters.

12. ScrapeStorm

Ease of use

ScrapeStorm offers 2 different modes. The flowchart mode is difficult to set up but fully customizable. Smart mode uses AI to detect webpage contents and extract data.

Using smart mode is super easy. You just need to enter a URL. It’ll automatically detect webpage content, pagination, and other elements.

But smart mode is not always accurate and often misses important data attributes. You can configure the scraper manually using flowchart mode, but that’s too complicated.

Cool features

- 5 data attributes

- 50 results per minute

- Schedule option available

- Export to csv, txt, xlsx

- Export to Google Sheets and local databases

Pricing

- Free tier: 3000 results per month

- Premium tier: $1.67 per 1000 results

| Pros | Cons |

|---|---|

| Cloud-based | No integrations |

| AI powered smart mode | Steep learning curve |

| Multiple data export options | Limited data attributes |

| Multiple local databases support | |

| Affordable pricing | |

Best for

Being a visual scraper, ScrapeStorm is good at extracting limited data. You can’t use it to scrape data at scale. It’s a good tool for price intelligence, and rank monitoring.

13. Parsehub

Ease of use

Parsehub is not at all easy to use. You’ll need to go through the training material to master this scraping tool. There’s no pre-built template for scraping Google SERPs.

Parsehub is the only visual software in the list that can extract whatever data you want. It can be customized to extract each and every element from a webpage.

The only difficult part is pinpointing data attributes. After selecting data attributes and pagination, you can just click “Get Data” and Parsehub will start data collection.

Cool features

- Cloud-based

- Up to 13 data attributes

- 100 results per minute

- Schedule option available

- API access

- Export data as csv, json, xlsx

- Integrates with Dropbox and Amazon S3

Pricing

- Free tier: 200 results per run

- Premium tier: $600 per month, Unlimited results

| Pros | Cons |

|---|---|

| Cloud based | Steep learning curve |

| Schedule available | Not suitable for beginners |

| API access | Limited integrations |

| No limitations | |

| Fast | |

Best for

Parsehub is best for nerds. If you’re familiar with web page structure, selectors, and HTML elements, you can use Parsehub pretty well. For beginners, it’s too hard to handle.
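To put the speed and pricing figures above side by side, here's a quick back-of-the-envelope calculation of how long 10,000 results would take and what they'd cost. It only covers the tools where I listed both a results-per-minute speed and a price per 1,000 results. The "+" in speeds like "130+" is treated as the minimum, and the 10,000 target is just an example number, so real-world runs will differ.

```python
from dataclasses import dataclass


@dataclass
class Scraper:
    name: str
    results_per_minute: float  # speed listed in this article
    price_per_1000: float      # premium price in USD per 1,000 results

    def minutes_for(self, results: int) -> float:
        """Minutes needed to collect `results` at the listed speed."""
        return results / self.results_per_minute

    def cost_for(self, results: int) -> float:
        """Cost in USD to collect `results` at the listed premium price."""
        return results / 1000 * self.price_per_1000


SCRAPERS = [
    Scraper("Lobstr.io", 130, 0.5),
    Scraper("Apify", 500, 0.05),
    Scraper("Botster", 20, 5.0),
    Scraper("Scrape-It", 350, 0.1),
    Scraper("Axiom AI", 5, 5.5),
    Scraper("ScrapeHero", 75, 0.63),
    Scraper("ScrapeStorm", 50, 1.67),
]

if __name__ == "__main__":
    target = 10_000  # example volume, pick your own
    for s in sorted(SCRAPERS, key=lambda s: s.minutes_for(target)):
        print(f"{s.name:<12} {s.minutes_for(target):>8.0f} min  ${s.cost_for(target):>6.2f}")
```

Sorting by time makes the gap obvious: the slowest tools need more than a day for a job the fastest ones finish in under an hour.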

So these were no-code Google SERP scrapers I found and tested. But as I stated earlier, it was hard to find no-code scrapers. Why?

Because most of the results were related to SERP APIs. So why not compare some of the best SERP APIs that actually work? Let’s do it!

Best Google SERP APIs

Many no-code tools covered above, like Lobstr.io, Apify, and Scrape-It, offer developer-ready APIs. They’re pretty great but the issue is scalability and speed.

In this section, we’re going to explore 5 SERP APIs specifically for collecting data programmatically. We’re going to compare them based on these factors:

- Scalability

- Speed

- Pricing

- Documentation

Let’s go 🏃

1. SERP API

Features

- SERP API offers up to 4 million searches per month

- It also provides a range of related APIs like maps, news, jobs, shopping, etc

- Average response time is 3 seconds

- Offers rich, detailed, and well-structured documentation

- Offers a playground with feature rich interface

Pricing

- SERP API costs $0.002 per API request

- You’re not charged for failed requests

| Pros | Cons |

|---|---|

| Fast | A little expensive |

| Good for high volume | |

| Clean documentation | |

| Won’t charge for failed requests and errors | |

| Offers a range of related APIs | |

2. Zenrows

Features

- Up to 3 million requests per month

- Average response time is 4 seconds

- Offers easy to understand documentation

- Provides a basic API playground

Pricing

- Costs $0.0008 per API request (with proxy)

- Doesn’t charge for failed requests

| Pros | Cons |

|---|---|

| Good for high volume | A little slow |

| Affordable pricing | Playground is too basic |

| Failed requests won’t count | |

| Clean documentation | |

3. DataForSEO

Features

- Up to 1 million searches per month

- Average response time is 9 seconds

- Documentation is a little messy

- Offers a user-friendly API playground

- Offers related APIs for other SEO tasks

Pricing

- Costs $0.0006 per API request

- Price varies with update frequency

| Pros | Cons |

|---|---|

| Best for SEO | Messy documentation |

| Affordable | Slow |

| User-friendly code playground | Failed requests are counted |

| Can handle high volume | |

4. Bright Data

Features

- Up to 500k searches per month

- Average response time is less than 2 seconds

- Offers clear and well-structured documentation

- Provides a user-friendly API playground

- Provides a specialized web scraping IDE

Pricing

- Costs $0.002 per API request

- You’re charged for both successful and failed requests

| Pros | Cons |

|---|---|

| Reliable | Expensive |

| User friendly code playground | Failed requests are charged |

| Dedicated scraping IDE | Not for high volume |

| Fast | |

5. ScraperAPI

Features

- Handles high volume

- Large 50M+ IP pool for geo-targeting & avoiding blocks

- Provides structured JSON results

- Reliable performance with automatic retries

- Clear documentation and easy integration

Pricing

- Costs start around $0.003 per successful API request

- Pay-per-successful-request model

| Pros | Cons |

|---|---|

| Simplifies scraping | Cost varies by features |

| High success rates | Confusing pricing tiers |

| Structured Google data | |

| Scalable | |

| Pay only for successful requests | |

These were the 5 best SERP API services that I tested personally. All of them offer a free trial for testing. You can try them with Python, Node.js, or any programming language you love.

As I mentioned earlier, you can try the APIs provided by no-code tools too.
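Per-request prices look tiny until you multiply them by volume. Here's a rough sketch that estimates the bill for the five APIs above, using the prices listed in this article. The `success_rate` parameter is a hypothetical knob I added to show how billing for failed requests (DataForSEO and Bright Data, per the tables above) inflates the total. Actual plans, tiers, and success rates will vary.

```python
# Per-request prices in USD as listed in this article.
PRICE_PER_REQUEST = {
    "SERP API": 0.002,
    "Zenrows": 0.0008,
    "DataForSEO": 0.0006,
    "Bright Data": 0.002,
    "ScraperAPI": 0.003,
}

# APIs that, according to the comparison above, also bill failed requests.
CHARGES_FAILED = {"DataForSEO", "Bright Data"}


def estimated_cost(api: str, successful_requests: int, success_rate: float = 1.0) -> float:
    """Estimate the cost in USD of getting `successful_requests` good responses.

    If the API bills failed requests, the number of billed attempts is
    successful_requests / success_rate (success_rate is an assumed value).
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    billable = successful_requests
    if api in CHARGES_FAILED:
        billable = successful_requests / success_rate
    return billable * PRICE_PER_REQUEST[api]


if __name__ == "__main__":
    volume = 100_000  # example monthly volume
    for api in PRICE_PER_REQUEST:
        print(f"{api:<12} ${estimated_cost(api, volume, success_rate=0.9):,.2f}")
```

Swap in your own volume and an honest success rate from your trial runs before comparing plans.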

Conclusion

That’s a wrap on our list of 15+ best Google SERP scrapers and APIs. Overall, Lobstr.io and Apify are the best no-code SERP scrapers in my opinion.

Both tools offer tailor-made and affordable no-code Google SERP scrapers as well as developer-ready SERP APIs.

For the SERP API, I’ll choose Zenrows. It’s affordable, scalable, and has beautiful documentation.

You can try all the scrapers and APIs yourself and choose the one that fits your needs.